Digital Photography 001

Under the hood of a digital camera

Version 2.0, ©2010, 2013 by Dale Cotton, all rights reserved.

As film and film cameras become less prevalent even in the developing world, digital photography is well on its way to becoming the new standard. If you are one of the thousands of people switching from film to digital each year, if you have even a passing knowledge of how to use a personal computer, and if like me you're the sort of person who hates being in the dark but instead has to know what's going on, then this introduction was written for you. This is what your manual never told you, and everything applies equally to digital cameras from cell phone to dSLR.

Recording brightness

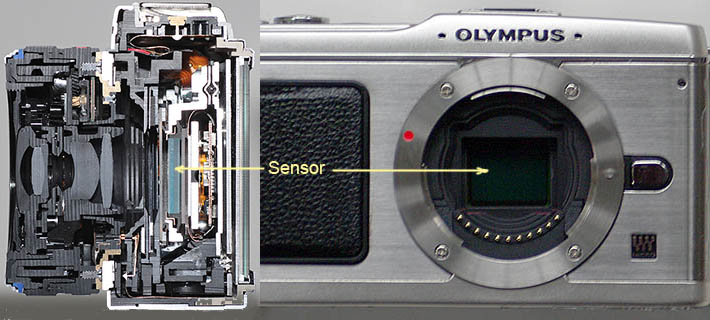

Fig. 1: Typical image sensor (cut-away: dc.watch)

Images are captured by focusing light via the same lens/optical systems developed for film photography but focusing it onto something called a light sensor (the green rectangle in Fig. 1) instead of light-sensitive film. A camera's light sensor is a rectangle of mostly silicon, made up of millions of microscopic electronic circuits called photodiodes arranged in tidy rows and columns. A photodiode generates electricity when exposed to light via the magic of the photoelectric effect. The more light it is exposed to, the more electricity it produces. Measuring the electrical charge of each of the millions of photodiodes in a camera sensor is how a digital photograph is created. It's that simple.

Does technical jargon make you nervous? Photodiode sounds difficult but it's just three common Greek words strung together. Photos = light, di = two, hodos = path. A diode is an electrical component with two electrical connections; a photodiode is a special kind of diode that does the electrical thing but also responds to light.

Fig. 2: Analogue-to-digital converter chip

The photodiode sensor turns light into electricity (the more light, the more electricity), but how do we convert a certain amount of electricity into a digital value? A complex component called the analogue-to-digital converter, or ADC, compares the actual charge of a given photodiode to its maximum possible charge, then expresses that ratio as a digital number, known as a pixel value (pixel being short for picture element). A pixel value gives us the brightness recorded by (usually) a single photodiode on a scale from pure black to pure white. The ADC can translate a given maximum electrical charge into almost any number of steps; the question is how many?
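To make the ratio idea concrete, here is a minimal sketch of what an ADC does in principle. The function name `quantize` and the choice to round down are my own assumptions for illustration; a real converter is analogue hardware, not code.

```python
def quantize(charge: float, max_charge: float, bits: int) -> int:
    """Turn a charge between 0 and max_charge into a pixel value.

    Hypothetical helper: the number of steps is 2**bits, and the
    result runs from 0 (pure black) to 2**bits - 1 (pure white).
    """
    levels = 2 ** bits
    # Express the charge as a fraction of the maximum, clamped to 0..1.
    ratio = min(max(charge / max_charge, 0.0), 1.0)
    # Scale the fraction to the number of steps, rounding down.
    return min(int(ratio * levels), levels - 1)


# A photodiode at half its maximum charge, digitized with 8 bits:
print(quantize(0.5, 1.0, 8))
```

The same half-full photodiode gives 128 with 8 bits but only 8 with 4 bits; more bits simply means finer steps between black and white.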

Digital 123s. A bit is the fundamental unit of information in digital technology, including computers, digital cameras, and digital audio. A bit is on or off, dah or dit, strong signal or weak signal, 1 or 0, which is why digital is known as a binary system (counting by twos). One bit can store two values, two bits can store four values (00, 01, 10, 11), three bits can store eight values, etc.

The next most common digital storage unit is the byte, which is eight bits, giving 2x2x2x2x2x2x2x2 = 256 values. Translated from binary arithmetic to decimal arithmetic, that gives us the integers 0 to 255. For example, the byte for 13 is 0000 1101. Because computer memory has long been standardized on the byte, it takes one byte to store any number from 0 to 255 (0000 0000 = 0 and 1111 1111 = 255). But it takes two bytes to store the number 256, because the first byte is full at 255. If your baskets can hold only eight apples each, you need two baskets to store nine apples.
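If you would like to check this arithmetic yourself, the following short sketch does it. The helper names `values_for_bits` and `bytes_needed` are invented for this example.

```python
def values_for_bits(n_bits: int) -> int:
    """How many distinct values n_bits can store."""
    return 2 ** n_bits


def bytes_needed(number: int) -> int:
    """How many whole bytes it takes to store a non-negative integer."""
    # bit_length() is the count of binary digits; round up to full bytes.
    return max(1, (number.bit_length() + 7) // 8)


print(values_for_bits(8))   # one byte: 256 values
print(format(13, "08b"))    # the byte for 13, written as eight bits
print(bytes_needed(255), bytes_needed(256))
```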

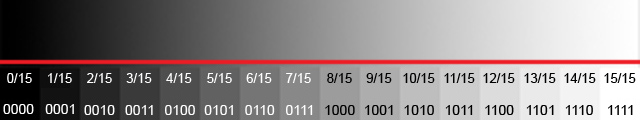

Fig. 3: Continuous/analogue brightnesses (top) vs. 16-step digitized (bottom)

Turns out the human visual system can at best distinguish about 1000 distinct shades of brightness between pure black and pure white (far more than the mere sixteen distinctions shown along the bottom of Fig. 3). 1000 is an awkward number in binary, since one byte can store 256 distinctions and two bytes can store 65536 distinctions; 1000 fits nicely into ten bits (1024 distinctions), but is too big for one byte (8 bits) of digital storage and much too small for two bytes (16 bits) of digital storage. More on this below.
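The ten-bit figure can be worked out rather than memorized. This is a small sketch; `bits_for_levels` is a name made up for the purpose.

```python
def bits_for_levels(levels: int) -> int:
    """Smallest number of bits that can tell `levels` values apart."""
    # The values are numbered 0 .. levels - 1, so count the binary
    # digits of the largest one.
    return (levels - 1).bit_length()


for levels in (16, 256, 1000, 65536):
    print(levels, "distinctions need", bits_for_levels(levels), "bits")
```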

Fig. 4: Flash memory card

Having translated brightness into appropriate numbers, a new problem arises: A typical camera sensor contains millions of photodiodes, so how to store the resulting millions of pixel values in a single picture? The obvious thing to do is to use the same digital memory technology already in production for computers. One of many such memory technologies is the flash card. Flash is relatively expensive, but unlike the RAM chips used in desktop computers, flash technology retains information without needing a continuous supply of electricity.

Recording colour

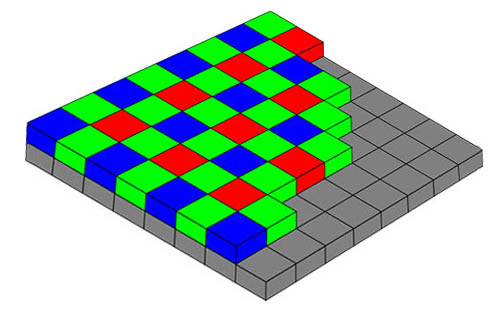

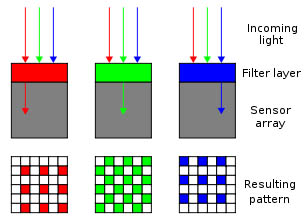

Fig. 5: Bayer filter array (modified from Wikipedia)

So far, there is no such thing as a light sensor that can directly sense colour. To a camera sensor, no matter whether CCD or CMOS, a rainbow is shades of grey. To capture colour information we have to resort to trickery. We've all seen coloured cellophane; a piece of hard candy might be wrapped in red or green transparent cellophane. If you hold a piece of red cellophane over one eye and close the other eye, you're seeing the world through a red filter – only red light can get through (looking at the world through those proverbial rose-coloured glasses). If you put a red filter over one photodiode, a green filter over a neighbouring photodiode, and a blue filter over a third photodiode, you can combine the signal strengths from the three photodiodes to specify any colour the eye can see, whether red, orange, yellow, mauve, purple, or whatever. So each of the millions of microscopic photodiodes within the postage-stamp-sized area of a light sensor has an equally microscopic red, green, or blue filter over it. The particular pattern of red, green, and blue filters is called the Bayer pattern, and is pretty much standardized in the photographic industry. (For technical reasons, the Bayer pattern actually uses two greens for each red and blue.)
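As a rough illustration of the pattern, the sketch below prints which colour filter sits over each photodiode. It assumes the repeating two-by-two tile starts with red in the top-left corner (often written RGGB); actual sensors may start the tile on a different corner, and the function names are my own.

```python
def bayer_filter_at(row: int, col: int) -> str:
    """Filter colour over the photodiode at (row, col), assuming an RGGB tile."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def bayer_mosaic(rows: int, cols: int) -> list[str]:
    """One string of filter letters per sensor row."""
    return ["".join(bayer_filter_at(r, c) for c in range(cols))
            for r in range(rows)]


for line in bayer_mosaic(4, 4):
    print(line)
```

Counting the letters in any even-sized patch shows the two-greens-per-red-and-blue ratio mentioned above.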

Fig. 6: Bayer filter concept (Wikipedia)

There are a couple of downsides to this trickery, however. For one thing, no one pixel records a full hue value. For another, colour filters cut out a certain portion of the incoming light: red cuts out roughly 70%, blue roughly 90%, and green roughly 40%, for an average loss of 60% of brightness once green is counted twice, as it is in the Bayer pattern. (Transmittance values, to be exact, are: 29.8839% for red, 58.6811% for green and 11.4350% for blue.)
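The 60% average only works out if green is counted twice, as it is in the Bayer pattern. Here is a quick check using the rounded loss figures from the paragraph above; the tile string "RGGB" and the function name are assumptions of this sketch.

```python
# Rounded percentage of light cut out by each filter colour (from the text).
LOSS_PERCENT = {"R": 70, "G": 40, "B": 90}


def average_loss(tile: str = "RGGB") -> float:
    """Average light loss over one Bayer tile, one letter per photodiode."""
    return sum(LOSS_PERCENT[colour] for colour in tile) / len(tile)


print(average_loss())        # green counted twice
print(average_loss("RGB"))   # a plain three-way average, for comparison
```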

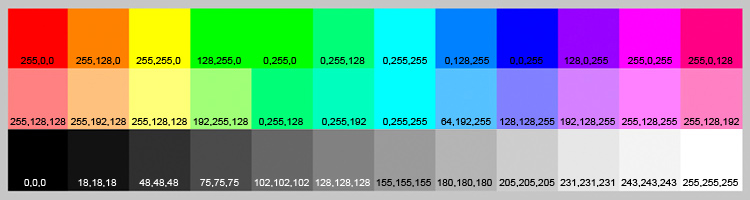

Fig. 7: RGB triplets for several pure colours (modified from Bill Atkinson)

The upshot of all this is that each pixel in an image file typically contains a one byte number for red, a one byte number for green, and a one byte number for blue. We express this in human terms as an RGB triplet, such as [255, 255, 255] for pure white and [0, 0, 0] for pure black. A few more examples: the triplet [255, 0, 0] represents pure red; the triplet [128, 128, 128] is a medium grey. But each of the three numbers can be any value from 0 to 255, so any random triplet, such as [137, 14, 248], represents one of the 16,777,216 possible 3-byte hues, whether or not we have a name for it, and even whether or not we can tell it apart from its neighbours (since the human visual system can only distinguish about 10 million of the 16.7 million RGB values).
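A triplet really is just three bytes side by side, which a few lines of code can show. This is an illustrative sketch with invented helper names, not any particular file format.

```python
def rgb_to_bytes(r: int, g: int, b: int) -> bytes:
    """Pack one RGB triplet into three bytes (each value must be 0..255)."""
    return bytes((r, g, b))


def colour_count(bits_per_channel: int = 8) -> int:
    """Number of distinct triplets when each channel has the given bits."""
    return (2 ** bits_per_channel) ** 3


pixel = rgb_to_bytes(137, 14, 248)
print(len(pixel), list(pixel))
print(colour_count())   # 256 x 256 x 256
```

Trying `rgb_to_bytes(256, 0, 0)` raises an error, which is the basket-of-eight-apples problem again: 256 does not fit in one byte.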

There is a mismatch between the fact that humans can distinguish 1000 shades of brightness (luminance) and 10,000,000 different hues. Logic suggests that ideally we would want our RGB triplets to cover [0-999, 0-999, 0-999]. This would allow us to specify 1 billion hues, which is 100 times as many as we can actually distinguish. This paradox is resolved once you take into account that the brightnesses of red, green, and blue overlap.

Image files

Fig. 8: DIGIC 4 is the signal processor chip used in a recent generation of Canon cameras (Canon)

The analogue-to-digital converter digitizes the output of each photodiode in the sensor. Yet another chip, called a signal processor, grabs all the digital numbers from the converter, works some more technical wizardry on those numbers, then spits them out as an image file for storage on the flash memory card.

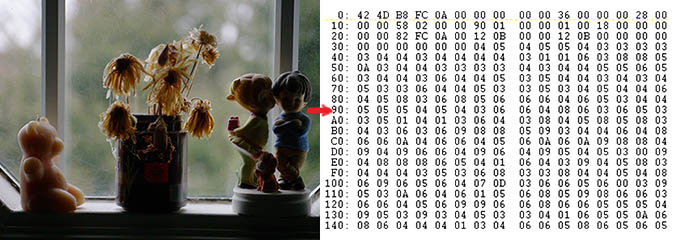

Fig. 9: Digital images are all numbers under the hood

Let's say we have a 12 megapixel digital camera with the pixels arranged in 3000 rows of 4000 pixels each. To record this as a series of RGB triplets, we would write a file with the first pixel's triplet, followed by the second pixel's triplet, all the way to the 12 millionth pixel's triplet, which is 36 million bytes of data. The only show-stopper with this method is that to open or read the file and turn it into a picture you also have to know at a minimum that each row is 4000 pixels long, since other ratios beside 3 to 4 are in common use. You could also store the numbers as 12 million R bytes, followed by 12 million G bytes, followed by 12 million B bytes.
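The two storage orders just described come down to two different ways of calculating where a given number lives in the file. The sketch below assumes one byte per colour value, rows stored top to bottom, and channels numbered 0 for R, 1 for G, 2 for B; all the names are mine.

```python
WIDTH, HEIGHT = 4000, 3000   # the 12 megapixel example from the text


def total_bytes(width: int, height: int) -> int:
    """File size of the bare RGB data at three bytes per pixel."""
    return width * height * 3


def interleaved_offset(row: int, col: int, channel: int, width: int) -> int:
    """Byte position when triplets are stored one pixel after another."""
    return (row * width + col) * 3 + channel


def planar_offset(row: int, col: int, channel: int,
                  width: int, height: int) -> int:
    """Byte position when all R bytes come first, then all G, then all B."""
    return channel * width * height + row * width + col


print(total_bytes(WIDTH, HEIGHT))
# Where the first pixel of the second row starts in each layout:
print(interleaved_offset(1, 0, 0, WIDTH))
print(planar_offset(1, 0, 0, WIDTH, HEIGHT))
```

Both formulas need the row width, which is exactly why a file of bare numbers is not enough to rebuild the picture.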

The convention here is to record some initial information, called metadata, at the beginning of an image file, then follow that with the long string of RGB numbers. Since some metadata has to be recorded to specify which arrangement has been used for RGB storage, camera companies quickly found it useful to throw in lots of other info, including the time and date the image was recorded, the camera model name, focal length, aperture, and shutter speed. Over time a particular convention for the layout of this metadata arose called EXIF (exchangeable image file format), and a particular class of programs called EXIF readers now exists to ferret out and display this information. Since the header information can be variable length, a key part of the header is a number, called the offset, that indicates where the header ends and the actual RGB data begins.
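To show how an offset lets a reader skip a variable-length header, here is a toy file layout. It is entirely made up for illustration and is not EXIF or any real image format: three 4-byte numbers (width, height, offset), then free-form header text, then the RGB bytes.

```python
import struct

# Hypothetical fixed part of the header: width, height, offset to pixel data.
FIXED_HEADER = struct.Struct("<III")


def write_image(width: int, height: int, info: bytes, pixels: bytes) -> bytes:
    """Build a toy image file: fixed header, variable info, then RGB data."""
    offset = FIXED_HEADER.size + len(info)
    return FIXED_HEADER.pack(width, height, offset) + info + pixels


def read_image(blob: bytes):
    """Read the toy file back, using the stored offset to find the pixels."""
    width, height, offset = FIXED_HEADER.unpack_from(blob, 0)
    info = blob[FIXED_HEADER.size:offset]
    pixels = blob[offset:offset + width * height * 3]
    return width, height, info, pixels


# A two-pixel image: one pure red pixel, one pure blue pixel.
blob = write_image(2, 1, b"camera=Example", bytes([255, 0, 0, 0, 0, 255]))
print(read_image(blob))
```

However long the info text grows, the reader still finds the first triplet, because it trusts the offset rather than assuming a fixed header length.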

BMP

What we've described so far very much resembles the BMP (bitmap) image file type. BMP is the simplest format to read and write but is not in common use because it takes up so much storage space. The image file from a 12 megapixel camera would take up more than 36 megabytes of storage if saved as a standard BMP file. Early on, programmers attempted to address this problem by employing a bit of cleverness. If you look at the RGB triplets in a typical image file you'll see long strings of repeats scattered about. A blue sky varies subtly and gradually from one shade of sky blue to another, but repeats of the exact same shade – perhaps [168, 185, 233] – will be rife. So instead of writing the repeats out one after the other, a modified version of the BMP file format allowed them to be written as [repeat count, RGB] pairs (called run length encoding or RLE). So a portion of a BMP file in which eleven pixels in a row are all the same sky blue colour would contain the digital equivalent of 11 x [168, 185, 233]. (A complete description of the BMP format can be found on SourceForge.) BMP files typically end with the .bmp extension.
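Run length encoding is simple enough to sketch in a few lines. This shows the general idea only, working on whole RGB triplets in a Python list; it does not reproduce the actual byte layout that BMP files use for RLE.

```python
def rle_encode(pixels):
    """Collapse consecutive identical pixels into (repeat count, pixel) pairs."""
    runs = []
    for pixel in pixels:
        if runs and runs[-1][1] == pixel:
            runs[-1][0] += 1          # same as the previous pixel: extend the run
        else:
            runs.append([1, pixel])   # different pixel: start a new run
    return [(count, pixel) for count, pixel in runs]


def rle_decode(runs):
    """Expand (repeat count, pixel) pairs back into the full pixel list."""
    pixels = []
    for count, pixel in runs:
        pixels.extend([pixel] * count)
    return pixels


sky = (168, 185, 233)
row = [sky] * 11 + [(170, 186, 233)]
encoded = rle_encode(row)
print(encoded)
print(rle_decode(encoded) == row)
```

Twelve triplets shrink to two pairs here, and decoding gives back exactly the original row, so nothing is lost. An image with few repeats gains nothing, since every pixel then carries an extra count.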

TIFF and JPEG

While the BMP format is relatively easy to understand, it has long since been superseded by more technically sophisticated file formats more suited to digital photographic image information. TIFF (Tagged Image File Format) started out with good intentions as a universal public domain image format with what were then all the latest bells and whistles. But various graphics app developers modified the format to address their own needs, quickly degenerating the standard into a visual Tower of Babel. TIFF files generally end with the .tif extension.

As cameras evolved with ever-larger numbers of megapixels and as motion picture capture entered the scene, the problem of file size quickly turned into a crisis. To address this problem the radically new JPEG (Joint Photographic Experts Group) format entered the scene in 1992, employing a logic that actually throws away data to allow cameras and software to reduce file sizes to any extreme desired with as little image degradation as possible. (Compression that throws away data is called lossy, as opposed to lossless compression which retains all data.) JPEG files generally end with the .jpg extension.
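The difference between lossless and lossy can be seen with a toy example. To be clear, this is not how JPEG works: the lossless half uses Python's general-purpose zlib compression, and the lossy half simply throws away the low bits of each byte, a crude stand-in I chose only to show data being discarded.

```python
import zlib


def lossless_roundtrip(data: bytes) -> bytes:
    """Compress and decompress; every original byte comes back."""
    return zlib.decompress(zlib.compress(data))


def lossy_roundtrip(data: bytes, keep_bits: int = 4) -> bytes:
    """Keep only the top keep_bits of each byte; the rest is gone for good."""
    shift = 8 - keep_bits
    return bytes((value >> shift) << shift for value in data)


sky = bytes([168, 185, 233])
print(list(lossless_roundtrip(sky)))   # identical to the original
print(list(lossy_roundtrip(sky)))      # close, but not the same numbers
```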

JPEG is the format that has become the default for camera output and for image exchange on personal computers, e-mail, and the Internet. TIFF, on the other hand, is frequently used by graphics professionals who are willing to trade its greater file sizes per image for the fact that data remains undegraded by lossy compression. TIFF files cannot be viewed in web browsers and e-mail apps.

Other formats. There have been many other image file formats in use. In the 1980s GIF (Graphics Interchange Format) was introduced by CompuServe and was nearly universal until it became mired in a patent dispute. It had several upsides for web use but also the serious limitation of allowing only 256 colours per image, rendering it pretty much useless for photographs. An open source and improved competitor to GIF ensued, called PNG (Portable Network Graphics). PNG files cannot be compressed to as small sizes as JPEG files, so PNG is not widely used for photographs. Yet another format in wide use is PSD (Photoshop Document). This is Adobe's proprietary image file format used by Photoshop. File sizes tend to be very large compared to JPEG, and PSD is not supported by web browsers. Interestingly, Adobe itself now recommends Photoshop users use TIFF instead of PSD, now that TIFF is fully mature.

Raw

More expensive cameras often have the option of saving images in yet another file format, called raw. Raw is actually not a single format but an entire class of formats with certain elements in common. Raw files contain a typical metadata section to start, like any other image format, but this is followed not by RGB triplets, but by the brightness numbers recorded by each photodiode in the sensor, one after the other. Since there is only one number (which can be either one or two bytes in length), not three, for each pixel, this is a fairly compact lossless file format, and lossless compression can be applied in the camera to further reduce file sizes. The downside of raw is that each camera model's raw format is unique and proprietary, so raw files cannot simply be viewed out of the camera by general-purpose apps like web browsers and e-mail packages. Instead, raw files must be converted into a more universal format, such as JPEG, for viewing. Raw files end with a variety of extensions, such as .orf, .nef, and .cr2, each extension being unique to a particular camera company.
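The saving from storing one number per photodiode instead of three per pixel is easy to put in figures. This sketch ignores the metadata section and any in-camera compression, and reuses the 4000 by 3000 sensor from the earlier example; the function names are my own.

```python
def raw_size_bytes(width: int, height: int, bytes_per_value: int) -> int:
    """Uncompressed raw data: one brightness number per photodiode."""
    return width * height * bytes_per_value


def rgb_size_bytes(width: int, height: int) -> int:
    """Uncompressed RGB data: three one-byte numbers per pixel."""
    return width * height * 3


print(raw_size_bytes(4000, 3000, 1))   # one byte per photodiode
print(raw_size_bytes(4000, 3000, 2))   # two bytes per photodiode
print(rgb_size_bytes(4000, 3000))      # full RGB triplets
```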

The specialized apps that convert raw files are called raw converters. A further upside to using raw files is that often raw converters running on a desktop computer can produce superior results to what the camera itself can do within the severe constraints of limited processing power and near-instantaneous time constraints.

File names: a niggly point that gets novices into trouble is the difference between file naming conventions on different computers. Windows computers allow very long file names but disallow a few reserved punctuation marks, namely < > : " / \ | ? *. Macintosh computers have restrictions of their own. Unix computers technically permit spaces and most punctuation in file names, but those characters cause trouble in web addresses and on the command line. Since much of the Internet is run on Unix computers this becomes a serious problem when uploading files from a personal computer to the Internet. The upshot is that it's safest to name your image files with no spaces and no other punctuation except the underline and the hyphen.
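If you have a folder full of awkwardly named files, the advice above can be automated. This is one possible sketch: the function name and the choice to swap each run of unsafe characters for a single underline are my own, and it leaves the extension after the last dot untouched.

```python
import re


def safe_filename(name: str) -> str:
    """Replace spaces and punctuation in the name part with underlines."""
    stem, dot, ext = name.rpartition(".")
    if not dot:                 # no extension at all
        stem, ext = name, ""
    # Anything that is not a letter, digit, underline, or hyphen becomes "_".
    stem = re.sub(r"[^A-Za-z0-9_-]+", "_", stem).strip("_")
    return f"{stem}.{ext}" if ext else stem


print(safe_filename("My Photo: sunset?.jpg"))
print(safe_filename("beach-2013_01.jpg"))
```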

Putting it all together

Fig. 10: Digital photographers enjoying their cameras

A digital camera is actually a small portable computer with a lens and sensor attached to it. Hopefully, now that you have a better understanding of the fundamentals of what's going on under the hood, you'll be able to avoid getting lost in a sea of technical confusion and simply enjoy your camera like the two fellows in Fig. 10.

Further reading

How Digital Cameras Work by Jerry Lodriguss (excellent for digging deeper)

Camera Fundamentals (how cameras in general work)